I wanted to add more fuel to the fire regarding AI. In my initial blog, Blog #5, AI Artificial Intelligence, on October 2, 2023, I talked about the current state of AI technology, especially the programs available to businesses and individuals (like ChatGPT). I pointed out that the tech is in its beginning stages. Many proclaim how great it is, but a lot of that is because it is so new and because we have read about it in sci-fi. I have used ChatGPT to correct my grammar and sentence structure, and I found that you need a human being to oversee the result. Sometimes it doesn’t understand and presents ideas and interpretations that don’t fit the narrative. The idea that you could use it to write school papers, blogs, and other informational material, as well as creative writing, seems ludicrous. Still, we live in a time when people look for shortcuts and can disregard the problems the program creates, whether that is the product’s poor quality or the ethics of publishing it uncorrected. This explains why some of the resulting pieces are so flawed. I also pointed out that the database used as the foundation for these systems is often too small, and that it determines both their performance and whether intentional fraud is perpetrated. Old human stuff.

You might recall a kerfuffle about a software engineer who blew the whistle on Google’s AI LaMDA (Language Model for Dialogue Applications). Blake Lemoine claimed the program was thinking and reasoning like a human being. Mr. Lemoine is convinced the program is “sentient, with a perception of, and ability to express thoughts and feelings equivalent to a human child.” [1] This is reminiscent of the novel by Robert A. Heinlein titled The Moon Is a Harsh Mistress [2]. In the story, a computer repairman lives on the moon and gets involved in the moon colony’s revolt against Earth. The story shows the moon computer achieving sentience once enough connections are made. This could explain sentience in humans, because we have trillions of connections; comparing that number with the connections in other animals who aren’t sentient, you could infer that this is how sentience is achieved. Mr. Lemoine was suspended and then fired from Google for violating employment and data security policies [3].

Some developments in cutting-edge science have produced exciting results. Dr. Christina Theodoris, in 2017 [4], wanted to use a new kind of AI model that Google engineers had described in their research. The Google researchers had given the model millions of sentences in English, along with their translations into German and French, and it was then able to translate sentences it hadn’t seen before. After many attempts to find a lab that would help her use this kind of model to learn more about cells, she found one run by Shirley Liu, a computational biologist at the Dana-Farber Cancer Institute in Boston, who was willing to let her try. Dr. Theodoris pulled data from 106 published human studies, which included 30 million cells, and fed it into a program called Geneformer. So far, the result is that the program can predict how a cell will react if specific procedures are done. In one case, it predicted what a heart cell (called a cardiomyocyte) would do if a gene called TEAD4 were shut down. The program indicated that shutting down the gene would severely disrupt the cell. When the prediction was tested, the heart cells grew weaker.

The AI can draw some conclusions from the data supplied, but the proof that these are valid has primarily been based on discoveries already made. Those earlier discoveries confirm that the AI’s conclusions were correct, and because they took years to make, the AI can definitely speed things up. This is the leap computers make (at least for now): using deductive reasoning with available data to reach a conclusion. This is not to diminish Dr. Theodoris’s work, which promises pioneering discoveries in the future.

Concerns about the more sophisticated tech have brought about calls for regulation so that it cannot be used to create, among other things, new biological weapons. This will be a cat-and-mouse game, though. You control a new crime with a regulation, but you can only control it once it has been committed and you understand what happened. Then, the next moment, another crime is committed. The TV series Almost Human, a crime drama about a human and his robot partner fighting crime in this environment, aired from 2013 to 2014 [5]. It shows this kind of constant struggle.

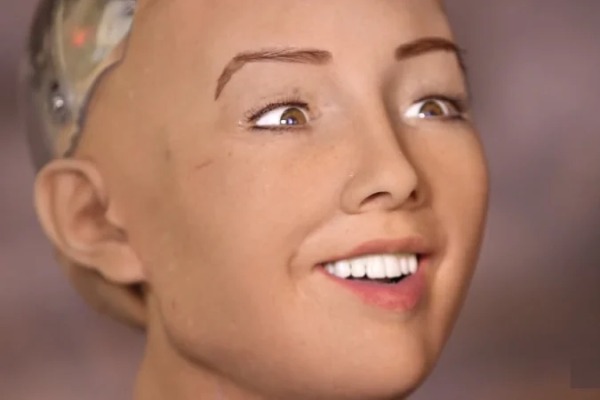

Writing this, I am reminded of the Turing Test. A fictional relative of it, the Voight-Kampff test, famously appears in the 1982 film Blade Runner [6], in which the policeman, played by Harrison Ford, tries to determine whether a young woman is a human or a machine. The Turing Test was devised by Alan Turing in 1950 [7]. The test is supposed to show whether you are interacting with a machine or a human. Google’s AI passed the test and then showed how the test was broken [8]. AI programs are designed to appear to be of human origin. In fact, passing the test is frequently described as the ability to “fool” a person into believing the response is from a human. While a program may be designed that way, the intent is to “appear” human; the computer is not considered to be “fooling” humans, as it is still a computer and unaware, or at least that is how it started out.

This is similar to how some speak of the genetics of evolutionary change as if traits exhibited some choice. In reality, mutations occur spontaneously. If a mutation works, it stays; if it doesn’t, the creature dies. This simple test depends on the environment outside and inside the cell or body when a living creature or cell mutates or changes a gene or group of genes. Mutation is part of evolution; in other words, mutation helps organisms survive, but it is a random process. In the same way, when discussing whether a computer program is aware, you should avoid speaking of emotions, especially when you don’t think the program is aware. It conveys a different idea than the one you are trying to convey.

We are moving along (at a rapid pace) to develop computers that do things faster by having them “learn,” using advanced programs to make decisions independently. However, this is not a sign that the AI is sentient. We, as humans, are at the beginning of this journey. Humans write stories that jump into the future, guessing what could happen with that technology.

Will AIs be sentient, like Skynet [9] or The Bicentennial Man [10]? I cannot say. However, if there truly is a path, we will find a way to create an aware computer/robot. Then we have to ask ourselves: Do we really want to?

©JM Strasser April 1, 2024 All Rights Reserved

Sources

Blog #5 AI Artificial Intelligence October 2, 2023 by JM Strasser

Footnotes

1. https://www.theguardian.com/technology/2022/jun/12/google-engineer-ai-bot-sentient-blake-lemoine

2. https://en.wikipedia.org/wiki/The_Moon_Is_a_Harsh_Mistress

3. https://www.cnn.com/2022/07/23/business/google-ai-engineer-fired-sentient/index.html

4. https://www.nytimes.com/2024/03/10/science/ai-learning-biology.html

5. https://www.imdb.com/title/tt2654580/

6. https://en.wikipedia.org/wiki/Blade_Runner

7. https://en.wikipedia.org/wiki/Turing_test

8. https://www.washingtonpost.com/technology/2022/06/17/google-ai-lamda-turing-test/

9. https://en.wikipedia.org/wiki/Skynet_(Terminator)

10. https://en.wikipedia.org/wiki/The_Bicentennial_Man
