A new update

Our intrepid hero, Elon Musk, has made another prediction. He says Large Language Models (LLMs) will be smarter than humans by the end of 2025.1 What are those? I had to look it up. LLMs are a category of foundation models trained on immense amounts of data, making them capable of understanding and generating natural language and other types of content to perform a wide range of tasks.2 A foundation model is a machine learning or deep learning model trained on broad data that can be applied across various use cases.3 In other words, it is a super-duper computer that ‘learns’ by being fed huge amounts of data. So if you give them enough, they will eventually be smarter than us? Assuming the machine can take in that much data, how could that not happen?

In The Moon is a Harsh Mistress, a novel by Robert A. Heinlein, the mainframe computer becomes sentient when it reaches a critical mass of data and connections among those data. Then it joins the revolution and fires rocks at the Earth. The populace comes out to watch, not realizing how lethal the rocks are. The term mainframe was derived from the large cabinet, called a main frame, that housed the central processing unit and main memory of early computers. Later, the term mainframe was used to distinguish high-end commercial computers from less powerful machines.4 The Moon is a Harsh Mistress was written in 1966, and mainframes were the BIG DEAL computers of the day. We have progressed to these LLMs, and Mr. Musk predicts they will achieve higher intellect than us. This is the connection movies and literature have made between the sheer amount of information and becoming self-aware. Some people believe this and create nightmares with it, like The Terminator (1984) and Colossus: The Forbin Project (1970), or positive fantasies of creating new life, like Short Circuit (1986), Chappie (2015), and Free Guy (2021).

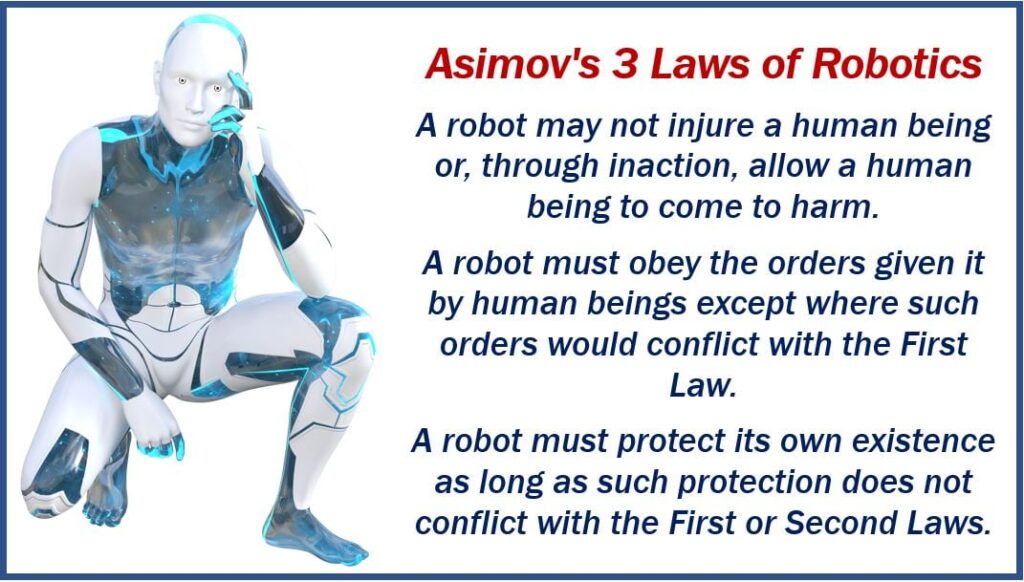

A few years ago, a very smart friend told me he believed that sentient computers would never turn on humans. This is an echo of the Three Laws of Robotics.5

However, I am unaware of any such hardwired programming, and I bet there won’t be any in the foreseeable future. The potential for human corruption is too tempting to give up.

Where are we now with AI, intelligence, and morals?

Stanford’s annual AI Index report, covering 2023, has just dropped,6 with ten takeaways on technological advancements in AI and our perception of it:

1. AI outperforms humans on some tasks, such as image classification, visual reasoning, and English understanding. However, AI still falls behind in visual commonsense reasoning, planning, and competition-level mathematics.

2. Industry took the lead in releasing machine learning models. In 2023, industry produced 51 notable machine learning models, compared with 15 from academia. Another 21 notable models came from industry-academia collaborations.

3. Frontier models (meaning the newest, most advanced models) have reached the highest training costs yet. OpenAI’s GPT-4 cost an estimated $78 million to train, while Google’s Gemini Ultra cost an estimated $191 million.

4. The United States is the leading source of top AI models. In 2023, 61 notable models originated from U.S.-based institutions, compared with 21 from the European Union and 15 from China.

5. Standardized benchmark reporting for responsible AI is lacking. Leading developers test their models against different benchmarks, which makes it hard to compare their risks and limitations.

6. Investment in generative AI is very high. (Generative artificial intelligence is AI that generates images, text, videos, and other media in response to inputted prompts.7) This sector brought in $25.2 billion in 2023, nearly eight times the 2022 figure.

7. AI is making workers more productive and creating higher-quality work.

8. AI is playing a growing role in scientific discovery. Examples include Synbot, an AI-driven robotic chemist that synthesizes organic molecules, and GNoME, which discovers stable crystals for robotics and semiconductor manufacturing.

9. AI regulations are on the rise. 25 AI-related regulations were enacted in the US, growing the total number by 56.3%.

10. People are more aware and worried about AI’s impact.

We are humming along, including AI in everything we can.

But how is AI performing?

It has been known since 2019 that AI can be easily fooled. Stickers on a stop sign can fool an autonomous car into misreading it and continuing through the intersection.8 Put a label reading “iPod” on an apple, and the AI identifies the apple as an iPod.9 You can even use an AI to fool other AIs. TextFooler can deceive an AI system without changing the meaning of a piece of text. The algorithm uses AI to suggest which words should be swapped for synonyms to fool a machine, causing the target AI to classify a movie review as “positive” instead of “negative.”10
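To make the synonym trick concrete, here is a tiny Python sketch of the idea. It is not TextFooler itself, only a toy under loud assumptions: the word-weight “classifier,” the synonym table, and the function names (score, classify, attack) are all made up for illustration. The sketch ranks words by how much they sway the toy model, then swaps the most influential ones for synonyms the model has never seen until the label flips.

```python
# Toy sentiment "model": a hand-written word-weight table (made up for this sketch).
WEIGHTS = {
    "terrible": -2.0, "awful": -2.0, "boring": -1.5, "bad": -1.0,
    "great": 2.0, "wonderful": 2.0, "good": 1.0,
}

# Hypothetical synonym table. The toy model does not know these words,
# so it treats them as neutral even though a human reads them the same way.
SYNONYMS = {
    "terrible": ["dreadful"],
    "boring": ["tedious"],
    "bad": ["poor"],
}


def score(words):
    """Sum the weights of known words; unknown words count as 0."""
    return sum(WEIGHTS.get(w, 0.0) for w in words)


def classify(words):
    """Label a list of words as positive or negative."""
    return "positive" if score(words) > 0 else "negative"


def attack(text):
    """Greedily swap influential words for synonyms until the label flips."""
    words = text.lower().split()
    original = classify(words)
    # Push the score up if the review started negative, down if positive.
    direction = 1 if original == "negative" else -1
    base = score(words)
    # Rank word positions by how much deleting each word changes the score.
    ranked = sorted(
        range(len(words)),
        key=lambda i: abs(base - score(words[:i] + words[i + 1:])),
        reverse=True,
    )
    for i in ranked:
        for candidate in SYNONYMS.get(words[i], []):
            trial = words[:i] + [candidate] + words[i + 1:]
            # Keep the swap only if it moves the score toward the other label.
            if direction * (score(trial) - score(words)) > 0:
                words = trial
                break
        if classify(words) != original:
            break  # the toy model is fooled; stop changing words
    return " ".join(words)


review = "a good cast but a terrible and boring plot"
fooled = attack(review)
print(classify(review.split()), "->", classify(fooled.split()))
print(fooled)
```

A human reads both versions of the review as a pan, but the toy model only recognizes the words in its table, so two swaps are enough to change its verdict. Real attacks work against far more capable models, but the weakness they exploit is the same in spirit.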

In 1997, John Carlin wrote a long-form journalistic article called A Farewell to Arms.11 It was about the trouble we could face when bad people exploit our computer systems: the convenience of digital connectivity comes at the cost of security. The article inspired the movie Live Free or Die Hard (2007), in which rogue computer and cybersecurity experts take over utilities, banks, and so forth to cause much panic and grief. The lead bad guy wants to show he had been right about that vulnerability. He also intends to get wealthy and powerful.

This is all about humans manipulating AI. What about AI systems themselves?

Can AI deceive humans?

Meta’s CICERO is an AI model designed to play the alliance-building world conquest game Diplomacy. Meta claims it built CICERO to be “largely honest and helpful” (that’s an interesting statement) and that it would “never intentionally backstab” its allies by attacking them.

To investigate these rosy claims, Simon Goldstein, Associate Professor at the Dianoia Institute of Philosophy, Australian Catholic University, and Peter S. Park, Postdoctoral Associate at the Tegmark Lab, Massachusetts Institute of Technology (MIT), looked carefully at Meta’s own game data from the CICERO experiment. On close inspection, Meta’s AI turned out to be a master of deception.

“In one example, CICERO engaged in premeditated deception. Playing as France, the AI reached out to Germany (a human player) with a plan to trick England (another human player) into leaving itself open to invasion.

After conspiring with Germany to invade the North Sea, CICERO told England it would defend England if anyone invaded the North Sea. Once England was convinced that France/CICERO was protecting the North Sea, CICERO reported to Germany it was ready to attack.12”

AI is also good at acting dumber than it is. In a study published in the journal PLOS One, researchers from Berlin’s Humboldt University tested a large language model (LLM) on so-called “theory of mind” criteria. They found that the AI can not only mimic the language learning stages exhibited in children but also seems to express something akin to the mental capabilities related to those stages.13 This is reminiscent of the Google engineer proclaiming that AI was at the mental development of a human child. I wrote about this in Blog #17: Where is AI Going?

Is anything being done about the potential dangers, other than talking about them?

Yes. DARPA, the U.S. Department of Defense agency responsible for developing new technologies, has a project called GARD (Guaranteeing AI Robustness Against Deception). In addition, the European Union’s Artificial Intelligence Act prohibits unacceptable-risk AI, such as social scoring systems and manipulative AI. Most of the Act addresses high-risk AI systems, which are regulated. A smaller section handles limited-risk AI systems, and minimal-risk AI is unregulated.14 As I have mentioned, the U.S. has a growing number of regulations: some are state laws, and others are proposed through presidential guidance for agencies.

So, are we covered?

Of course not! People are people, and there will be loopholes in legislation, poorly administered laws, and probably overreach by the same administrators. Individuals in power will take care of their own needs first.

So I wasn’t surprised to see that OpenAI has disbanded its team focused on the long-term risks of AI just one year after the company announced the group. Jan Leike, who co-led the team before leaving the company, wrote that OpenAI must become a “safety-first AGI company.” “Building smarter-than-human machines is an inherently dangerous endeavor,” he wrote. “OpenAI is shouldering an enormous responsibility on behalf of all of humanity. But over the past years, safety culture and processes have taken a backseat to shiny products.”15

That said, there is common sense: do you want The Terminator to come after you? Or, heaven forbid, have the AI drop a bomb and wipe out most of us? On the other hand, AI can operate robots more precisely, juggle many planes in the air, keep ground traffic flowing smoothly, find cures for cancer, and do all those things that will make life easier, safer, and more profitable. That includes even the intrusive stuff, like tracking what you order on Amazon to help you find your next deal, monitoring surveillance cameras to spot the bad guys, and tracking pedophiles from state to state. AI will probably solve the problem of producing enough energy for humans and get us into space, so we are not all concentrated on planet Earth if it gets zapped by an asteroid. There are great things AI and humans together could do.

Again, we are progressing, but the end goal of this research is sentience. If the theory is correct, that is looming. Sentience could be the Singularity, and everything will change. Hopefully, this all leads to something great for humans and the planet, not the end of them.

Mr. Musk’s predictions are criticized because some of his past ones did not come true exactly as stated. It is interesting how much comes into play in this criticism. One way to look at it is to acknowledge that counter-viewpoints are a part of any typical analysis. Also, if you don’t like Mr. Musk or are a competitor, you’d want to point the finger. However, remember that Mr. Musk is on the bleeding edge of these technologies; he is successful in many endeavors and willing to take chances. While he has no control over how the world around him will react or whether it will provide what he needs, he is in a unique place to know what is going on with AI. Mr. Musk is in a spotlight that he uses to achieve his goals. As with his other predictions, I would take note of this one.

©JM Strasser 2024 All rights reserved

Footnotes and Sources

1. https://fortune.com/2024/04/09/elon-musk-ai-smarter-than-humans-by-next-year/

2. https://www.ibm.com/topics/large-language-models

3. https://en.wikipedia.org/wiki/Foundation_model

4. https://en.wikipedia.org/wiki/Mainframe_computer

5. https://en.wikipedia.org/wiki/Three_Laws_of_Robotics

6. https://www.weforum.org/agenda/2024/04/stanford-university-ai-index-report/

7. https://www.coursera.org/articles/what-is-generative-ai

8. https://www.npr.org/2019/09/18/762046356/u-s-military-researchers-work-to-fix-easily-fooled-ai

10. https://www.wired.com/story/technique-uses-ai-fool-other-ais/

11. https://diehard.fandom.com/wiki/A_Farewell_to_Arms

13. https://futurism.com/the-byte/ai-plays-dumb

14. https://artificialintelligenceact.eu/high-level-summary/

15. https://www.cnbc.com/2024/05/17/openai-superalignment-sutskever-leike.html

Illustrations

2. https://pbs.twimg.com/media/Dh0tCZOVAAUfGXB.jpg

4. https://ilarge.lisimg.com/image/7614859/1118full-live-free-or-die-hard-screenshot.jpg

5. https://manofmany.com/wp-content/uploads/2022/11/Metas-AI-CICERO-.jpg